The Thermodynamic Roots of Selfhood

Before scientists named the neuron, before they understood electricity as more than lightning or a catfish’s jolt, the question was already there, haunting fire-lit caves and Athenian academies alike: what animates this lump of matter we call a body? Surely, the spark of thought, the vivid inner theater of dreams and desires, the simple fact of feeling like someone, wasn't just… meat. From this primal wonder sprang ideas of the soul – an animating principle, perhaps residing in, but ultimately distinct from, its perishable physical home.

The strength of this intuition suggests ancient roots, perhaps emerging early in evolution as a vital tool for organisms to distinguish self from world. But most cultures throughout history embraced animism, seeing spirits as interwoven with the material, so natural selection alone can’t explain why Western culture amplified feelings of distinctness into a metaphysical dualism: the idea that mind and body are different kinds of substance, not explainable in each other’s terms.

Dualism goes back at least as far as Zoroastrianism, and was well established by the time of Plato, whose theory of Forms depicted a higher, perfect realm distinct from flawed material existence, as vividly symbolised in his Allegory of the Cave. Greek philosophy’s tendency to view the mind or soul as immaterial achieved even more cultural influence through its integration into Christian theology, which personified Forms—a synthesis Nietzsche dubbed “Platonism for the masses.”

In more modern times, René Descartes formalized this split, drawing a stark line between thinking substance (res cogitans) and physical substance (res extensa). But this bequeathed a riddle: how could a non-physical mind interact with a physical body? How does a ghost command a machine? Descartes’ own suggestion (the pineal gland) proved unconvincing, but a deeper challenge arose with physics: conservation of energy makes it hard to see how an immaterial mind can influence the physical world without violating fundamental laws.

This apparent impasse spurred alternatives. Perhaps consciousness is merely an observer, a non-causal byproduct (epiphenomenalism). Or could functionally identical beings—philosophical zombies—exist without any subjective experience at all? The mere thought experiment highlights the enigma: subjective awareness feels like something extra, distinct from purely physical processes.

This enduring mystery (why complex information processing should feel like something from the inside) is the modern mind-body problem, which philosopher David Chalmers dubbed the 'hard problem of consciousness': Why isn't all the brain's complex computation done "in the dark"? The gap between objective neural function and subjective first-person experience seems profound. But as Chalmers also noted (posing the 'meta-problem'), our very bafflement is itself puzzling: why are we so constitutionally inclined to see subjective experience as mysteriously separate, even as neuroscience reveals more of its mechanisms?

By answering the meta-problem, I will argue there is no mystery. Our deep-seated intuition—the pervasive feeling that mind is distinct from physical substance—arises directly from the essential function consciousness performs. In particular, to persist against the constant threat of dissolution, fragile living systems must develop self-awareness, regulating themselves by not only creating predictive models of their own internal dynamics, but distinguishing these from the external world. But fragility guarantees the most predictive self-models transcend physical details. The feeling of being distinct, even non-physical, is then the function of avoiding dissolution; our bafflement — the meta-problem — is necessary to survive.

Because of this, the arguments of this essay won’t fully satisfy. The nagging suspicion that information processing could conceivably happen in the dark won’t fully disappear.

But the intellectual framework used to interpret these feelings is not inevitable. We don’t have to struggle to reconcile the raw feeling of being a self with scientific models of the brain. We can simply update the cultural toolkit that makes mind and matter look like different substances.

This way, we can view the counterintuitive nature of consciousness as an artifact of our constraints as observers, as we now do for quantum mechanics and relativity. These theories are hard to understand because of our scale as observers (too big for the first, too small for the second)1, while consciousness is hard to understand because our fragility creates a thermodynamic imperative to think we exist independently of the physical world.2

This deflationary view is harder to accept than quantum mechanics or general relativity because of our cultural heritage, which amplified then deified the functionally useful self/world boundary. Because of this, we remain culturally attached to a self-aggrandising anthropocentrism that sees our minds as exceptional. We haven’t fully shaken the hierarchical ideas of Plato and Aristotle, and their culmination in the Great Chain of Being, which ranked humans above animals and nature. Indeed, this had such influence that Descartes believed only human minds are conscious, leading to some rather inhumane experiments.3 It’s time for a break with this; time to naturalise the mind and explore its continuities across the tree of life, instead of mystifying it.

Where centuries of philosophical tradition have muddied the waters here, modern technology offers clarity on how something non-physical, like a mind, can relate to a physical substrate.

The Clarity Offered by Modern Technology: Software, Consciousness, and the Laws of Reality

The Software Analogy – A Separate Logical Realm?

You know what I’m thinking of: the magic of computer software. It pauses video streams instantly, teleports game characters across worlds, and makes digital objects appear from nowhere. These actions seem to occur within a logical realm separate from the constraints of everyday physics—energy conservation and locality seem no hurdle here! Of course, we know this is illusory: beneath the surface, every step depends on electrons moving within silicon chips.

Yet, a fascinating disconnect exists: there's no fixed link between a specific software function and the hardware's exact physical state. The same command—like teleporting a character—triggers different complex electrical sequences depending on the CPU (Intel or ARM) or even varying conditions on the same CPU. This flexibility is called multiple realizability: the same function is achievable through different physical means.

Because of multiple realizability, explaining software purely by tracking electron flows isn't just impractical; it misses the point. What causes the outcome is the software's logic, not the specific path of electrons this time. Consider sending a chat message. Clicking "Send" displays the message and transmits it, involving intricate electrical signals. But that specific cascade isn't uniquely necessary; different circuits could achieve the same result. What is necessary and sufficient is the software's logic: the state before clicking (message ready) and the app's rules (clicking "Send" triggers transmission). The hardware simply executes instructions; the software's logic dictates the result. Figure 1, illustrating a function (A causing D) mediated by different hardware states (B or C), captures this: the higher-level logic (A → D) is the more general and relevant explanation.

Figure 1: Multiple Realizability. A function (A causing D) can be physically implemented (mediated) by different hardware states (B or C). Explaining the A -> D transition solely via B or C is too specific; the higher-level software logic (A -> D) provides the more general and relevant explanation. As such, a regulating model will prefer the second explanatory framework.
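The relationship in Figure 1 can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the two "hardware routes" (`send_via_b`, `send_via_c`) and the helper `logical_outcome` are hypothetical names invented here, standing in for the mediating states B and C.

```python
# Two hypothetical realizations of the same logical function (A -> D).
# They pass through different intermediate states (B or C).

def send_via_b(message: str) -> dict:
    # Route B: encode to bytes for transit, then decode on arrival.
    wire = message.encode("utf-8")
    return {"delivered": wire.decode("utf-8"), "route": "B"}


def send_via_c(message: str) -> dict:
    # Route C: reverse the characters for transit, then restore them.
    wire = message[::-1]
    return {"delivered": wire[::-1], "route": "C"}


def logical_outcome(result: dict) -> str:
    # The software-level description keeps only what the function is for.
    return result["delivered"]


message = "hello"
realizations = (send_via_b, send_via_c)
outcomes = {logical_outcome(f(message)) for f in realizations}
routes = {f(message)["route"] for f in realizations}

print(outcomes)  # a single logical outcome
print(routes)    # two distinct mediating routes
```

Describing the system by `routes` distinguishes cases that make no difference to the result; describing it by `outcomes` captures the one regularity that holds across both.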

This relationship offers a powerful analogy for consciousness. The system's function (software) operating according to a logic distinct from its physical components (hardware) seems to mirror the relationship between mind and body. Consciousness, like software logic, appears to operate with a degree of independence from the specific, transient states of the physical brain, giving us the intuition that it is a different substance.

Note, this is not to suggest that standard software possesses intrinsic subjectivity or the feeling of consciousness. That feeling arises from a specialized kind of meta-software—one that actively models itself as distinct from, and under threat by, an external world. While conventional software also follows rules that can be realized by various physical circuits, it does not operate in environments where those circuits are subject to constant, high-entropy perturbation. As a result, it has no need to construct a self-model that treats itself as autonomous from its surroundings—the environment, in its case, is relatively stable. I discuss potential computer consciousness in a dedicated section later.

The Limits of Reductionism and the Dualism Hidden in Physics

The immediate instinctual response to this analogy is often dismissive: "But zoomed out, it's all reducible to physics! There is no need for feelings or distinct mental states!" However, this confident reductionism warrants closer examination. What truly deserves to be called "real"? To claim that only the base physical substrate is real betrays a Platonic bias. Within complex, self-maintaining systems, it is arguably the functional "laws"—the stable patterns resilient to particular circumstances—that have a stronger claim to reality, precisely because they are predictive and persistent. This is logically equivalent to the claim that physical laws are real, unless the claimant admits dualism.

To see why, consider the classic Newtonian paradigm in physics, which software echoes. Given precise initial conditions (like particle positions and momenta) and the transition rules (the laws of physics), the subsequent state of the system is determined. Similarly, given a software state and the program's rules (its code), the next logical state is determined, seemingly regardless of the specific mediating hardware. But let's turn the lens onto the physical laws themselves. When billiard balls collide according to Newton's laws, few bother asking why these laws should transcend the specific initial positions and momenta involved. Why? Because we've unconsciously accepted the notion that these laws exist timelessly as Platonic Forms, untouched by material specifics.

This tacit acceptance reveals the hidden dualism at the heart of traditional physics. It posits immutable, timeless laws as separate from, yet governing, the specific, changing physical conditions. The laws dictate how matter behaves but are not affected by it, implying they cannot be reduced to the material and are therefore a different kind of substance. By reifying physical laws as fundamentally separate entities, then, materialism ironically succumbs to the very dualism it aims to refute. Despite our scientific pride, most of us remain unwitting Cartesian dualists.

The Cosmological Case Study – Laws and Unexplained Conditions

This underlying dualism isn’t mere pedantry, a point made obvious when we examine cosmology. Fundamental physical laws are time-symmetric—a movie of particle interactions run backward still obeys the laws. Yet, our universe exhibits a clear time-asymmetry, most famously the relentless increase of entropy (colloquially disorder, see later section for details). The standard 'laws + initial conditions' framework can only account for this by postulating an extraordinarily specific, incredibly low-entropy initial state for the observable universe—the 'Past Hypothesis'.
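The time-symmetry of the underlying laws can be checked numerically in a toy case. The sketch below is an assumption-laden illustration, not a model of the universe: it uses a unit-mass particle on a spring (an arbitrary choice) and the velocity Verlet integrator, which is itself time-reversible. Running the law forward, flipping the velocity, and running the same law again returns the system to its starting state.

```python
def acceleration(x: float) -> float:
    # Unit mass on a unit-stiffness spring: a = -x (illustrative choice).
    return -x


def verlet_step(x: float, v: float, dt: float) -> tuple:
    # One velocity Verlet step; the scheme is symmetric under time reversal.
    v_half = v + 0.5 * dt * acceleration(x)
    x_new = x + dt * v_half
    v_new = v_half + 0.5 * dt * acceleration(x_new)
    return x_new, v_new


def evolve(x: float, v: float, dt: float, steps: int) -> tuple:
    for _ in range(steps):
        x, v = verlet_step(x, v, dt)
    return x, v


x0, v0 = 1.0, 0.0
x1, v1 = evolve(x0, v0, dt=0.01, steps=1000)

# "Run the movie backward": flip the velocity and apply the same law.
x2, v2 = evolve(x1, -v1, dt=0.01, steps=1000)
x_back, v_back = x2, -v2

print(abs(x_back - x0), abs(v_back - v0))  # both near floating-point zero
```

Nothing in the rule itself picks out a direction of time; any asymmetry has to come from somewhere else, which is the role the special initial state plays in the standard framework.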

But it wouldn’t be far off the mark to say that the ‘Past Hypothesis’ is a euphemism for ‘God Hypothesis’: it is an extremely complex, ad-hoc condition invoked to make the model match reality; God, without personification. If we think carefully, the Christian God came from the dualist intuition that mind and body are separate. In modern language, the Christian God is a super-intelligent programmer who resides outside the simulation; he wrote the rules but is untouched by them. The Past Hypothesis serves the same narrative function: transcendent laws imposed on a material world that started off complex (God was already there).

Symmetry Breaking and Functionalism – A Path Beyond Dualism

But there’s a way out of this dualism! We can propose that laws and initial conditions were not fundamentally independent from the very beginning. There was likely an initial, unified state where they were intertwined (Figure 2). Subsequently, a symmetry break occurred early in the universe's history, leading to the practical independence we now observe, where laws appear timeless and distinct from the conditions they govern. But they aren’t actually independent: without the material world, how would we even know the laws?

Figure 2: A symmetry break at the beginning of the universe is a better explanation for apparent independence of initial conditions and laws than actual independence, since the latter implies the dualism materialism attempts to refute, and on close analysis has logical equivalence with the Christian (Deist) God Hypothesis, itself an artifact of dualism.

But this is just functionalism: what makes something real is not its constitution, but its behaviour.4 Crucially, this proposed symmetry break in physics—the emergence of practical independence between laws and conditions—is logically equivalent to the apparent separation we observe between software and hardware, or mind and body. The point isn't that software or mind belongs to a truly different substance. Rather, a functional or practical independence emerges, allowing the higher level (software logic, mental states) to operate reliably across variations in the lower level (hardware states, neural activity). Just as without material, there would be no physical laws, without brains there would be no minds, and without hardware, there would be no software.5

This perspective gains further weight when we compare the nature of these "laws". We call laws of physics fundamental (“real”) because they obey symmetries, not because they explain everything (as seen above, they don’t explain the initial conditions of the universe). Modern physics makes crystal clear that “laws” express symmetries and invariances, not necessities. As one example, energy conservation6 reflects time-translation symmetry (the laws are the same at every moment in time).7 In the same vein, the functional 'laws' or 'software' modeled by a conscious system represent the invariances crucial for its own persistence and effective functioning, abstracted away from the noisy flux of its physical microstates. Consciousness, viewed as the subjective apprehension of these vital functional constraints necessary for regulation, is conceptually parallel to physical law as the description of symmetry. Why should one level of emergent description, grounded in essential invariance, be considered inherently more 'magical' or less 'real' than the other?
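The link between time-translation symmetry and energy conservation can be made concrete with a short worked derivation. It is a sketch under an assumption introduced here: the system is described by a Hamiltonian $H(q, p, t)$ obeying Hamilton's equations (the symbols $q$, $p$ and $H$ are not part of the argument above).

```latex
\begin{aligned}
\frac{dH}{dt}
  &= \frac{\partial H}{\partial q}\,\dot q
   + \frac{\partial H}{\partial p}\,\dot p
   + \frac{\partial H}{\partial t},
  \qquad
  \dot q = \frac{\partial H}{\partial p},\quad
  \dot p = -\frac{\partial H}{\partial q} \\
  &= \frac{\partial H}{\partial q}\frac{\partial H}{\partial p}
   - \frac{\partial H}{\partial p}\frac{\partial H}{\partial q}
   + \frac{\partial H}{\partial t}
   = \frac{\partial H}{\partial t}.
\end{aligned}
```

If the rules do not change from one moment to the next, then $\partial H / \partial t = 0$ and the energy $H$ is conserved. The conservation law is a restatement of an invariance, not an extra fact laid on top of it.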

The Thermodynamic Engine of Separation

If this practical independence isn't magic, but the result of symmetry breaking, how does it arise and persist? What makes these functional patterns stable enough to seem separate from their substrate? Answering this requires delving into thermodynamics, particularly as it applies to information and living systems.

Start by distinguishing a macrostate (a coarse-grained property, like a gas's temperature) from a microstate (the exact arrangement of molecules at an instant) of a physical system. According to the Second Law of Thermodynamics, disorder (maximum entropy) is the overwhelmingly likely fate of an isolated system. Ludwig Boltzmann explained8 this: a disordered macrostate (like gas filling a container) simply corresponds to vastly more possible microscopic arrangements than an ordered one. Entropy quantifies this microscopic disorder.
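Boltzmann's counting argument can be illustrated with a toy gas. The sketch below assumes 100 distinguishable particles, each sitting in either the left or the right half of a box; the macrostate is the number on the left, and the entropy (in units of Boltzmann's constant) is the logarithm of the number of microstates compatible with it. The particle count and function names are illustrative choices.

```python
from math import comb, log

N = 100  # number of gas particles (toy value, assumed for illustration)


def multiplicity(n_left: int, n_total: int = N) -> int:
    # Number of microstates with exactly n_left particles in the left half.
    return comb(n_total, n_left)


def boltzmann_entropy(n_left: int, n_total: int = N) -> float:
    # Entropy in units of k_B: S / k_B = ln W.
    return log(multiplicity(n_left, n_total))


ordered = boltzmann_entropy(0)      # every particle on the right: W = 1
mixed = boltzmann_entropy(N // 2)   # evenly spread: W is astronomically large

print(multiplicity(0), multiplicity(N // 2))
print(ordered, mixed)
```

The evenly spread macrostate is not favoured by any force; it simply corresponds to overwhelmingly more arrangements than the ordered one.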

Claude Shannon generalized this concept to information theory. Shannon entropy measures the uncertainty (or lack of predictability) in a system described by probabilities. For example, the colour of a traffic light in the middle of the night at a rural intersection has low Shannon entropy, because we know it will likely be green even if we aren’t at the intersection yet. Boltzmann's physical entropy is, in fact, a specific case of Shannon entropy: it quantifies our uncertainty about the exact physical microstate when we only know the macrostate (like temperature or volume). This powerful insight allows us to treat the orderliness or predictability of informational patterns, like software functions or conscious states (which are, by definition, macrostates), using the same conceptual framework as physical disorder.
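A small calculation makes the traffic-light example concrete. The probabilities below are assumptions chosen for illustration, not measurements. The last line shows the sense in which Boltzmann's entropy is a special case: for W equally likely microstates, the Shannon entropy is just the logarithm of W.

```python
from math import log2


def shannon_entropy(probs) -> float:
    # H = -sum(p * log2(p)), in bits; zero-probability outcomes contribute nothing.
    return -sum(p * log2(p) for p in probs if p > 0)


# Assumed probabilities for (green, amber, red).
rural_night = [0.98, 0.01, 0.01]    # almost always green: highly predictable
busy_junction = [1 / 3, 1 / 3, 1 / 3]  # no colour favoured: maximally uncertain

print(shannon_entropy(rural_night))    # well under 1 bit
print(shannon_entropy(busy_junction))  # log2(3) bits

# Uniform distribution over W microstates gives H = log2(W).
W = 8
print(shannon_entropy([1 / W] * W))
```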

How does this relate to living systems? Living systems are islands of profound order – low-entropy physical structures. They maintain this state by constantly importing order (in the form of energy and structured resources) from their surroundings and exporting disorder (as heat and waste). To sustain this improbable state, they must encode and process information that accurately represents their environment, enabling adaptive responses that actively resist the natural drive toward disorder. Thus, informational order in biology is inextricably linked to physical processes that consume energy and reduce uncertainty.

The Cost of Clarity, Self-Modeling, and Dissipation

But how does abstract informational order maintain its integrity against the noisy physical reality, and what is the energetic cost?

Shannon entropy, which at first glance appears to be about abstract knowledge, thus has a deep connection to Boltzmann entropy, which appears more grounded in physics. Enter Rolf Landauer, who formalised the connection, showing information isn't free. Erasing information (which is essential for maintaining a clean logical state by discarding irrelevant details about the fluctuating microstate, effectively reducing uncertainty about the system's meaningful past) must dissipate energy as heat, inevitably increasing the physical entropy of the surroundings. Maintaining the 'software's' apparent independence and robustness against lower-level noise requires this continuous energy expenditure within an open system. There is a physical price for informational clarity. Figure 8 illustrates this: by restoring internal order, the system “forgets” the different pasts caused by perturbations from green atoms, externalising them as greater disorder in the momenta of the green external atoms.
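Landauer's bound puts a number on this price: erasing one bit at temperature T dissipates at least k_B T ln 2 of heat. The sketch below evaluates it at roughly body temperature; the choice of 310 K and the function name are assumptions made for illustration.

```python
from math import log

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def landauer_bound_joules(bits: float, temperature_kelvin: float) -> float:
    # Minimum heat dissipated when erasing `bits` of information at this temperature.
    return bits * K_B * temperature_kelvin * log(2)


per_bit_body = landauer_bound_joules(1, 310.0)       # roughly body temperature
per_gigabyte = landauer_bound_joules(8e9, 310.0)     # 8e9 bits

print(per_bit_body)   # on the order of 3e-21 joules
print(per_gigabyte)   # still tiny, but strictly greater than zero
```

The bound is a floor rather than a typical cost; real devices and cells dissipate far more per bit, but none can go below it.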

Now we can see how this relates to the feeling of the mind's distinctness. For a self-regulating system like an organism, a critical task is predicting its own future internal states to maintain stability (homeostasis). The most effective way to do this isn't via a computationally intractable, moment-by-moment simulation of every particle (microstate). Instead, prediction relies on identifying the invariances in the system's dynamics—the stable, higher-level patterns or functional rules (macrostate) that persist despite the constant churning of the underlying physics. Capturing these invariances yields the most predictive model with the least complexity. This functional logic, the 'software,' remains constant across countless different physical implementations, and is in that sense more real and relevant to the system itself for its own persistence. From the perspective of the organism's own need for self-modeling, this invariant functional level is the effective law governing its persistence. The feeling that mind (the functional logic) is distinct from body (the transient hardware state) arises directly from this functional necessity: survival depends on modeling the invariant rules, not the fluctuating substrate.

The link between information, energy, and entropy becomes even clearer in systems far from thermodynamic equilibrium, like us. Non-equilibrium thermodynamics, particularly through work like Crooks' Fluctuation Theorem and Jeremy England's related proposals on "dissipation-driven adaptation," quantifies the relationship between thermodynamic irreversibility and entropy production. A simple illustration is throwing a ball into the air with air resistance. Unlike in a vacuum, where the ball’s descent would mirror its ascent, air resistance causes the ball to lose energy as heat both going up and coming down. This breaks time-reversal symmetry: the downward path is not a perfect reflection of the upward path, because retracing it would require the surrounding air to “cooperate,” converging on the ball and handing its heat back. According to Crooks’ Theorem, the probability of seeing the exact time-reversed trajectory (where the ball would spontaneously absorb heat from the air and retrace its path upward) is exponentially suppressed by the amount of entropy produced in the forward process. That is, the more dissipation (energy lost as heat), the more statistically unlikely it becomes to witness the reverse. This highlights a key feature of open, driven systems: processes that produce entropy are exponentially favored over their time-reversed counterparts. As a result, there emerges a kind of physical selection pressure for structures that are good at absorbing energy and dissipating it, like living systems, making them more likely to form and persist. This helps explain why physical matter self-organises into systems better characterised as software, breaking symmetries of the physical world and giving us a sense of as-if dualism.
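The exponential suppression can be made quantitative with a rough sketch. It assumes the surroundings act as a heat bath at a fixed temperature, so that the entropy produced is the dissipated heat divided by that temperature; the heat values and the function name are illustrative. Because the ratio for a macroscopic object underflows ordinary floating point, the code works with its base-10 logarithm.

```python
from math import log

K_B = 1.380649e-23  # Boltzmann constant in J/K


def log10_reverse_to_forward(heat_joules: float, temperature_kelvin: float) -> float:
    # Crooks-style ratio: P(reverse) / P(forward) = exp(-entropy_produced / k_B),
    # with entropy_produced = heat / T (assumes a bath at fixed temperature).
    entropy_in_kb = heat_joules / (K_B * temperature_kelvin)
    return -entropy_in_kb / log(10)


# A molecular-scale event dissipating about one k_B*T of heat.
molecular = log10_reverse_to_forward(4.0e-21, 300.0)

# A thrown ball dissipating one joule to the air.
ball = log10_reverse_to_forward(1.0, 300.0)

print(molecular)  # a modest negative number: reversals are common
print(ball)       # an enormous negative exponent: reversals are never seen
```

At the molecular scale the reverse path is only mildly disfavoured, which is why single molecules visibly jitter back and forth; at the scale of a ball the same formula makes the reverse path unobservable in practice.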

Consciousness as Actively Maintained Functional Order

The software/consciousness analogy can now be deepened significantly. Their apparent independence from their physical substrate isn't magic, nor is it evidence of a separate substance. It arises because they represent specific, low-entropy informational macrostates that are actively maintained against the constant threat of dissolution and noise. This maintenance requires a continuous, energy-consuming, dissipative process. Erasing irrelevant microstate information to preserve the integrity of the functional macrostate (the software logic, the coherent stream of consciousness) costs energy and dissipates heat, aligning with Landauer's principle. This ongoing physical work, necessary to maintain informational order, may itself be driven by the thermodynamic tendency (as suggested by England) for driven systems to organize in ways that effectively dissipate energy.

The practical independence felt between mind and body, or observed between software and hardware, is thus grounded in the physical necessity of constantly rebuilding and maintaining specific informational patterns. This task, intrinsically linked to the thermodynamic drive of far-from-equilibrium systems to persist, involves dissipating energy to erase noise and maintain functional coherence. This ongoing physical work isn't just a byproduct; it may be the very engine that creates and sustains the practical "symmetry break" we experience as the distinctness of mind, and perhaps underlies our persistent sense of self through time.

II. What Types of Systems Might be Conscious (and Why?)

To understand the thermodynamic foundation of consciousness more concretely, let's examine how four different entities maintain structure against the universe's relentless pressure towards disorder, and assess how likely it is that this maintenance requires consciousness.

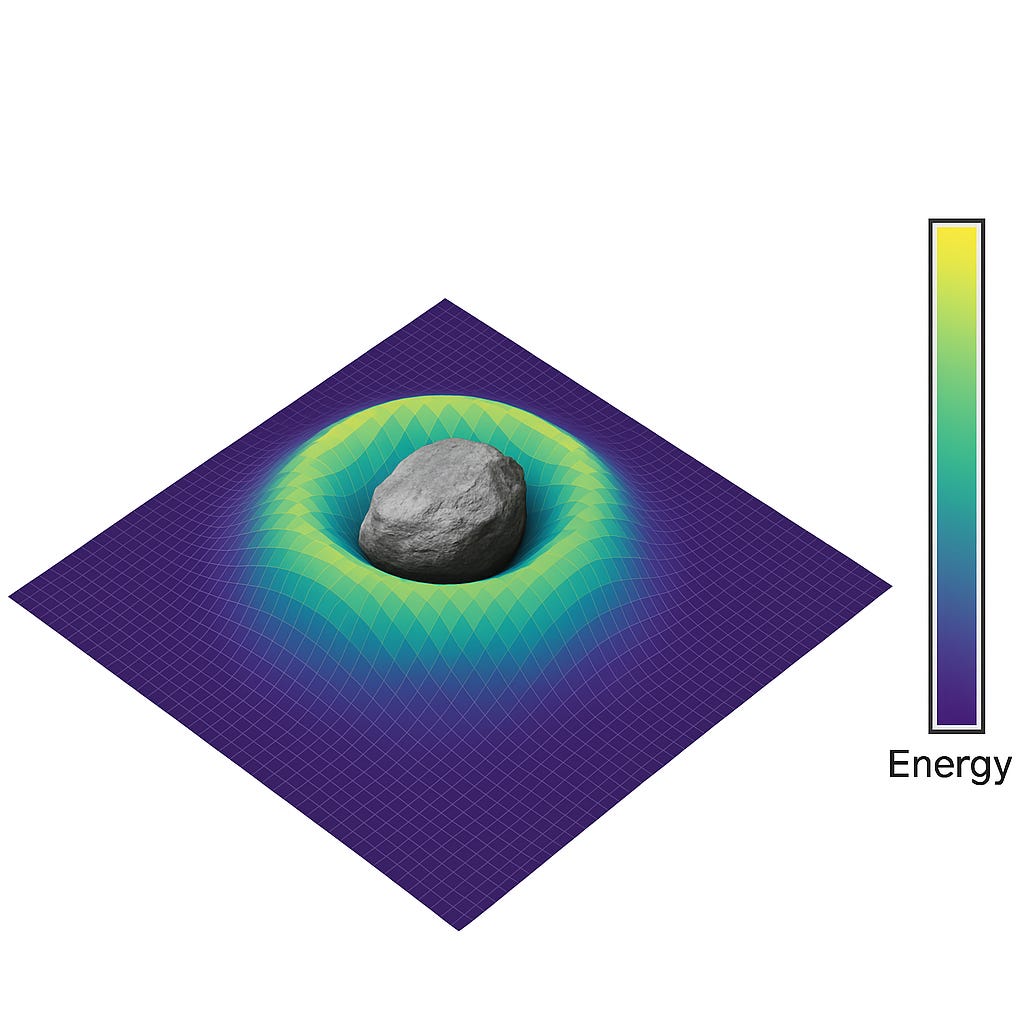

1. The Rock: First, consider a simple rock. It possesses a highly ordered, low-entropy structure formed by strong chemical bonds. This internal order is inherently stable; immense energy is required to break these bonds. The rock passively resists the thermal jostling and chemical reactions of the external world primarily through this robust inertia. It has minimal interaction with its environment beyond slow erosion or chemical weathering. The high energy barriers to breaking its chemical bonds mean it occupies a deep well on its potential energy landscape (which we can picture as a metaphorical artifact of its weight, as in Figure 4), forming a bulwark against the environmental forces trying to pull its high-energy structure apart into dust.

2. The Single-Celled Organism: Now picture a single-celled organism, like a bacterium. It too has a complex, low-entropy internal order, but its chemical makeup is far less inert than the rock's. Organic chemistry involves constant reactivity; the energy barrier preventing its dissolution is relatively low. Unlike the rock, it cannot persist passively. It must actively fight decay by constantly regulating its internal state and exchanging energy and matter with its surroundings, "feeding on negentropy," as Erwin Schrödinger put it. However, its interaction with the environment is limited. Protected by a cell wall or membrane with relatively few channels, it is far less sensitive and reactive than a complex creature. Its regulation relies on simpler feedback loops responding to immediate chemical gradients or physical pressures. It actively maintains itself, but its engagement with the world is basic. On the energy landscape, it occupies an intermediate position: actively maintaining itself out of equilibrium, perhaps in a shallow energy well or on a small plateau (see Figure 5), more stable than a creature on a precipice, but far less intrinsically stable than the rock. Complex self-modeling isn't obviously required for this level of persistence.

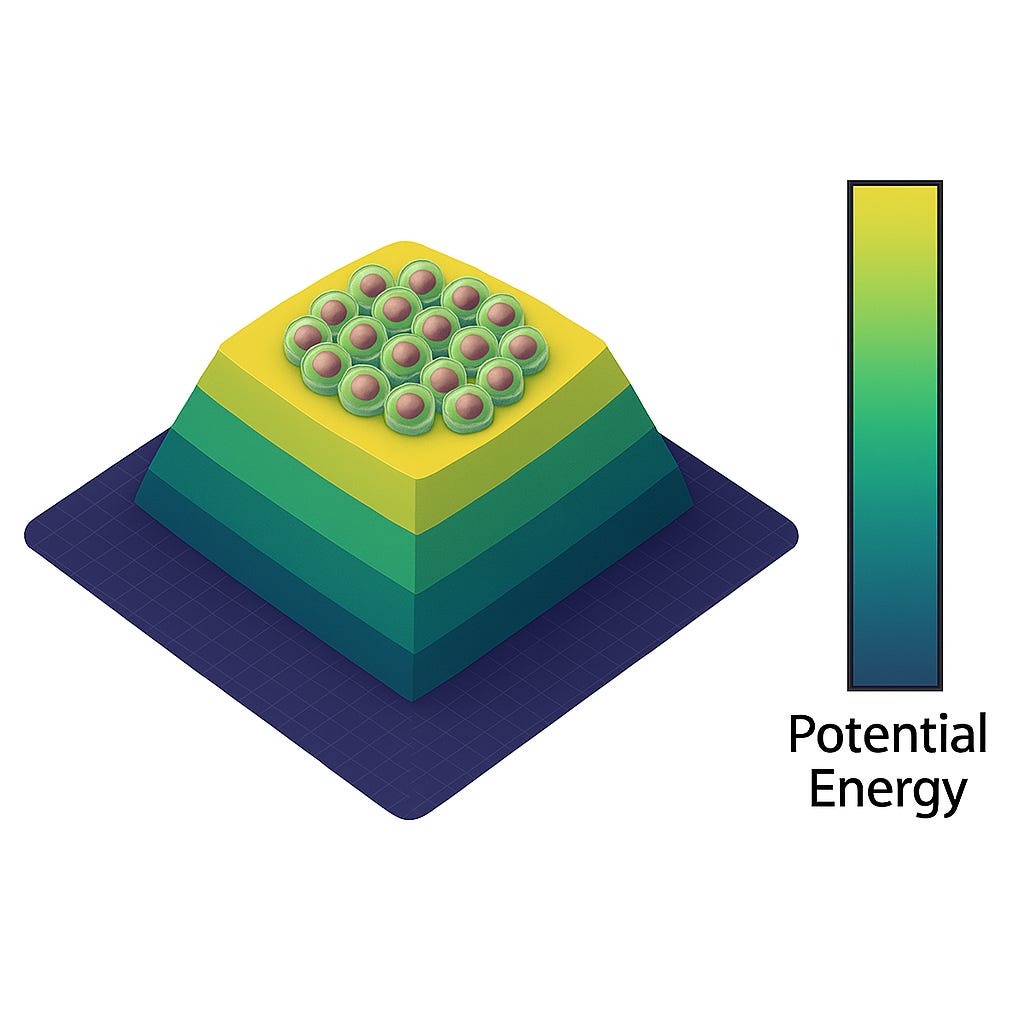

3. The Cell Within an Organism: Think about a cell within a multicellular organism—say, one in your fingertip. Like the lone cell, it is inherently fragile, its complex internal order constantly threatened. It too must actively maintain itself. But its immediate environment is dramatically different. It is not directly exposed to the chaotic external world but is bathed in the highly regulated, buffered internal milieu of the organism. Neighboring cells, controlled fluids, and precise signals create a zone of exceptional stability for the cell. While the cell itself still possesses high potential energy (representing its complex order), the organism actively reshapes the local energy landscape around it, creating metaphorical terraces and retaining walls that prevent catastrophic slides into disorder (see Figure 6). This cell's persistence relies heavily on the regulatory activity of the larger system it inhabits.

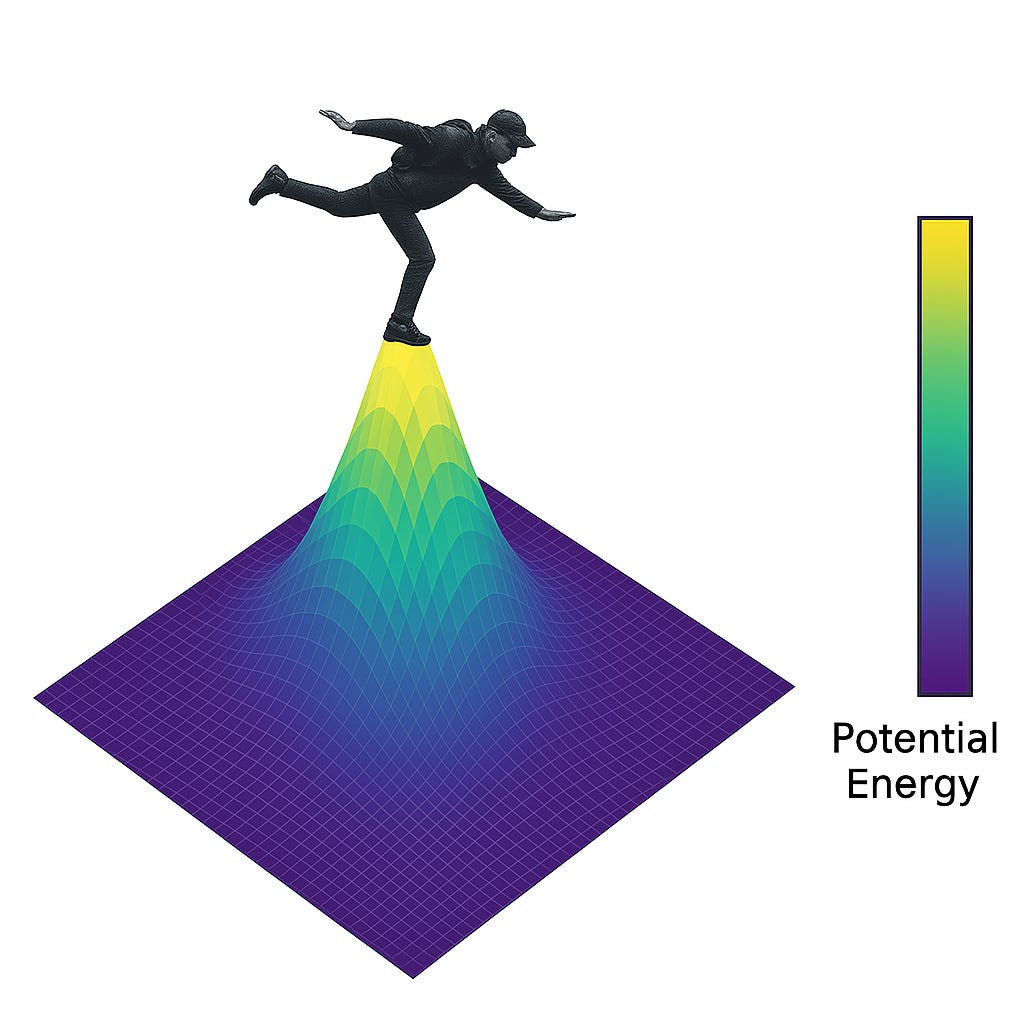

4. The Multicellular Organism (e.g., Human): Finally, consider the multicellular organism as a whole. It represents the pinnacle of active persistence coupled with extreme environmental interaction. Built from inherently fragile components (like the cell in Case 3), the organism must maintain a stable internal environment while navigating a complex, often unpredictable external world through a sophisticated nervous system. Its structure is vastly improbable, its energy state high and far from equilibrium. Unlike the single cell with its limited channels, the organism possesses myriad sensors and effectors, making it acutely sensitive and highly reactive to environmental perturbations. This requires constant, active regulation on a massive scale. On the energy landscape, the organism is like an acrobat balanced precariously on a high peak (see Figure 7), requiring continuous, intricate adjustments (informed by complex internal models) to avoid tumbling into the abyss of thermodynamic equilibrium and dissipation.

These four cases highlight crucial distinctions in the strategies for persistence. The rock is passive. The single cell is active but limited in its environmental interaction and therefore in its need for regulatory complexity. The cell within an organism benefits from the organism's stability. The complex organism itself combines inherent fragility with intense environmental interaction, demanding the most sophisticated active regulation.

This active self-maintenance demands effective regulation. To persist, a fragile organism needs mechanisms to keep itself stable against environmental perturbations. A crucial insight here comes from cybernetics: Conant and Ashby’s Good Regulator Theorem9 states that any effective regulator must contain, or be, a model of the system it controls (including itself against its environment). This need for self-modeling aligns with contemporary frameworks like Karl Friston's Free Energy Principle10, which views organisms as systems that must minimize surprise or prediction error about their sensory inputs to stay alive—a process inherently requiring internal, predictive models of the world and their own bodies.

Historically, we assumed a relationship between consciousness and language.11 At first glance, this makes sense. One reason the need to distinguish self from world arises is that other agents create similar sensory signals with different consequences for action. In conversation, for example, you attenuate auditory signals from your own voice when it is your turn to speak (so that you can talk smoothly without being distracted by your own voice), but accentuate similar signals when it is your interlocutor’s turn. Without this sort of dynamic, we might expect less need for the added complexity of distinguishing self-generated from world-generated signals, since they will usually be different.

But careful reflection reveals this to be anthropocentric. The need for self-models that distinguish self-caused from world-caused sensory consequences arises even for solitary agents navigating a complex physical environment. Consider an organism climbing: the tactile feedback from a planned push-off (self-caused, predictable) must be differentiated from the sensation of the surface unexpectedly crumbling (world-caused, unpredictable), even if the raw signals are momentarily similar. Likewise, the visual system must distinguish the expected optic flow generated by self-movement from the independent motion of an external object, like a falling branch. Failure to make these distinctions, that is, to attribute sensory causes correctly, is metabolically costly or outright perilous. Thus, the utility of a self-model capable of generating predictions based on action (efference copies) and attenuating expected feedback to highlight salient external changes is not merely a social adaptation; it's a prerequisite for effective interaction and persistence within any dynamic environment that can produce sensory ambiguity relative to the agent's own activity. This self-modeling imperative, rooted in the basic physics of interaction and survival, predates the complexities often associated with consciousness, such as language or sophisticated social reasoning, hinting at deeper evolutionary origins. Indeed, we can't even rule it out in single-celled organisms.
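The efference-copy idea in the climbing example can be sketched as a comparison between predicted and observed feedback. Everything specific here is an assumption for illustration: the linear `forward_model`, its 0.8 coefficient, and the tolerance are hypothetical, not claims about real sensorimotor systems.

```python
def forward_model(push_force: float) -> float:
    # Hypothetical self-model: expected tactile feedback from a planned push-off.
    return 0.8 * push_force


def attribute_cause(push_force: float, observed_feedback: float,
                    tolerance: float = 0.1) -> tuple:
    # Compare the efference-copy prediction with what was actually sensed.
    predicted = forward_model(push_force)
    residual = observed_feedback - predicted
    # Feedback matching the prediction is attenuated as self-caused;
    # a large residual is flagged as coming from the world.
    cause = "self" if abs(residual) <= tolerance else "world"
    return cause, residual


firm_hold = attribute_cause(push_force=1.0, observed_feedback=0.8)
crumbling = attribute_cause(push_force=1.0, observed_feedback=0.2)

print(firm_hold)  # feedback as predicted: attributed to the agent's own action
print(crumbling)  # feedback far below prediction: the surface gave way
```

Nothing in this loop requires language or other agents; it only requires that the agent's own actions have sensory consequences that could be confused with events in the world.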

For an organism battling entropy, the Good Regulator Theorem implies the necessity of an internal self-model to guide its regulatory actions. Furthermore, Ockham's Razor combined with efficiency considerations suggests the optimal self-model for maintaining overall organismic integrity focuses on the controllable, essential patterns (the macrostate) rather than attempting to track every transient microstate detail. Consciousness, in this view, is intimately tied to this process: it is the subjective aspect of this sophisticated, macrostate-focused homeostatic regulator.

To maintain its own improbable order, the organism's self-model must focus on preserving its stable overall patterns—its essential configuration or macrostate. It achieves this partly by ignoring or averaging over countless transient microscopic details—the specific state or history of every molecule (its microstate). Think of homeostasis: your body maintains a stable temperature (a macrostate) despite constant, tiny fluctuations at the molecular level (microstates). What matters for self-preservation is the overall pattern, not the microscopic chaos. This self-regulation feels holistic and unified.
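The temperature example can be sketched as a minimal feedback loop. This is a toy under stated assumptions: the setpoint, the gain, and the size of the random kicks are invented for illustration, and the regulator is a simple proportional controller rather than a model of real physiology. The point is that the controller never represents individual kicks (the microstate); it only tracks the deviation of the temperature (the macrostate) from its setpoint.

```python
import random

SETPOINT = 37.0  # target body temperature in degrees Celsius (illustrative)
GAIN = 0.5       # fraction of the deviation corrected per step (assumed)


def regulate(steps: int, seed: int = 0) -> list:
    rng = random.Random(seed)
    temp = SETPOINT
    history = []
    for _ in range(steps):
        # Microscopic perturbation; the regulator never models it individually.
        kick = rng.uniform(-0.5, 0.5)
        # The regulator acts only on the macrostate: deviation from the setpoint.
        correction = GAIN * (SETPOINT - temp)
        temp = temp + correction + kick
        history.append(temp)
    return history


history = regulate(1000)
worst = max(abs(t - SETPOINT) for t in history)
print(worst)  # stays within one degree of the setpoint
```

With these numbers the deviation halves each step before the next kick arrives, so it can never accumulate beyond one degree, however the individual kicks fall.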

Conversely, to navigate the external world, the organism’s model must track specific, often unpredictable details in the environment—potential threats, resources, obstacles. Survival depends on reacting to particular events and objects. This interaction with the world feels granular, complex, focused on distinct external things.

This fundamental informational asymmetry—between the internal need to stabilize patterns by discarding micro-details, and the external need to track external micro-details—is the bedrock of our intuition of mind-body dualism. It is the likely answer to Chalmers' meta-problem of why consciousness feels so mysterious. The internal, holistic focus required for self-modeling resonates with our feeling of a unified self (Descartes' res cogitans, or thinking substance). The external, granular focus required for world-modeling resonates with our perception of a divisible, mechanical world (res extensa, or extended substance). This functional distinction therefore creates the feeling that mind and body are different kinds of things, even if they are not made of different substances. Our bafflement doesn't signal spooky metaphysics; it's a cognitive echo of the physical strategies essential for life's persistence.

Figure 4: Though rocks have high potential energy, their strong covalent bonds pose a high energy barrier to dissipation.

Figure 5: How vulnerable a single-celled organism is depends on the species. If it has a highly protective cell wall and lives in a low-entropy environment, we might consider it less vulnerable than an animal with a nervous system.

Figure 6: Collective buffering obviates the need for constant entropy reversal, and therefore for a self-model, and therefore for consciousness.

Figure 7: People are highly ordered and therefore have high potential energies. But their complex nervous systems make them highly sensitive to environmental perturbations, so they balance atop an entropic abyss, constantly needing to resist tendencies toward disorder (autopoiesis).

Figure 8: When a fragile entity is in a high-entropy environment (represented by the random movements of green atoms), many perturbations to internal order are possible. But irrespective of the initial perturbation path (A or C), homeostasis is restored, “forgetting” physical microstate information by externalising it in the momenta of external atoms.

Major Problems Addressed by the Theory

This functionalist account of our dualistic intuitions has major implications. If consciousness arises from actively maintaining fragile order against entropy, it cannot be the exclusive province of humans or even animals with complex brains. A single-celled organism employing feedback loops to maintain its integrity against the surrounding chaos performs this function. It might therefore possess a rudimentary form of subjective experience – what it feels like to be that striving, persisting entity. As discussed, plausibility here depends on entropic considerations, and especially on the need to distinguish self-generated from world-generated sensations.

The theory also offers potential solutions to long-standing philosophical puzzles:

The Combination Problem: How do the experiences of individual parts combine to form the unified consciousness of the whole? If consciousness is the organizing principle ensuring persistence, then a higher-level system (like an organism) achieves unity by imposing its organizational needs top-down onto its components. It creates a stable internal environment (the "terraced landscape" for the cells within) that coordinates the parts and suppresses their need to run independent self-preservation routines. The potential consciousness of the parts is subsumed by the functional coherence of the whole.

Top-Down Causation: How can an overall organizational pattern exert causal influence? This "top-down causation" operates in any goal-directed system fighting entropy. In homeostasis, for instance, the body's need to maintain 37°C (a macrostate goal) directs lower-level actions like shivering or sweating. This higher-level requirement for stability guides the parts without violating physics. Evidence for such top-down organisational control comes from developmental biology, particularly Michael Levin's work. Tadpole embryos, guided by organism-level bioelectric fields, can correctly reposition transplanted eye cells: the overall blueprint overrides the parts' initial placement. Manipulating these fields can even cause planarian flatworms to regenerate entirely different head shapes, corresponding to species that branched off 200 million years ago, or even to develop two heads, all without genetic changes! These experiments show that higher-level organisational patterns exert powerful causal influence, shaping the parts to ensure the integrity of the whole. They also show that sophisticated bioelectric computation and pattern control occur even outside traditional nervous systems, using ion channels common across multicellular life for hundreds of millions of years, suggesting the underlying principles of self-modeling for persistence operate more broadly and anciently than often assumed. “Brains,” seen this way, are an anthropocentric abstraction that doesn’t reflect biology. Are these systems conscious? Not like us, that’s for sure, but not at all? Let’s not replicate Descartes’ errors!

Goal-Directedness without Retrocausality: Such goal-directedness doesn't require mysterious backward causation or 'retro-causality.' Unlike closed systems where the past rigidly dictates an increasingly disordered future, living organisms are open systems constantly working in the present to maintain stability into the future (such as survival or equilibrium). Because achieving this future stability is the goal, the need to preserve the overall organizational pattern (the macrostate) directs the current physical components, often 'forgetting' or discarding irrelevant details about past microstates to maintain the target state (as illustrated conceptually in Figure 8). This is NOT magic, because it involves energy intake.

The Impossibility of Philosophical Zombies: Unlike theories treating consciousness as intrinsic or merely epiphenomenal, this view posits the feeling of independence as a regulatory function that actively maintains fragile systems against dissipation. It is this activity, not implementation details, that is invariant and hence “real.” As discussed, this definition of “real” matches the one used in conventional physics, which derives laws from symmetries. Consequently, philosophical zombies (beings physically identical to us but lacking inner experience) are not conceivable. The function is the experience; the organizational process cannot happen "in the dark."

Phenomenal Experiences Explained

The real test of any theory of consciousness lies in its ability to shed light on the quality of subjective experience – the "what-it's-likeness" of specific states. Viewing consciousness as the feeling associated with active thermodynamic and informational self-maintenance allows us to frame intriguing hypotheses about phenomena ranging from time perception to musical appreciation. Why, for instance, might time often seem to speed up as we age? The thermodynamic framework offers a potential explanation rooted in the brain's management of entropy. To maintain stability across a lifespan, older brains adapt by reducing the volume of novel, high-entropy sensory information they process in detail, effectively filtering more aggressively. This functional shift is analogous to the energy barrier posed by the structure of the rock to environmental perturbations (Figure 4). By processing fewer units of genuinely new or surprising information per second, the stream of consciousness becomes less densely packed with distinct moments, making time feel like it's passing more quickly. This is essentially the outcome of learning. Consider how, when learning to play the piano, each movement, sound, and mistake demands attention — consciousness is saturated with detail. But as skill develops, the brain learns to filter out the irrelevant; performance becomes more fluid, even automatic. Similarly, older individuals have accumulated countless such filtering routines over time — efficient entropy management strategies that streamline experience, at the cost of its felt richness.

Conversely, experiences known to dramatically increase the brain's entropic load, such as the effects of classic psychedelic compounds, often seem to make time slow to a crawl.12 The "entropic brain hypothesis," associated with researchers like Carhart-Harris and Karl Friston and their REBUS model, posits that these substances temporarily disrupt the brain's usual predictive filtering mechanisms. This allows a much larger volume of raw, less predictable sensory information to enter conscious processing – in essence, significantly increasing neural entropy. Within the framework of this essay, such a surge requires a correspondingly massive increase in regulatory activity. This heightened internal effort — the struggle to maintain coherence amidst the flood — could manifest subjectively as an exceptionally dense and intensified conscious experience, leading to the perception that time itself has dilated or stretched out.

As another example, viewing music through the lens of entropy can shed light on our deep emotional reactions to it. When we hear dissonance — combinations of notes that clash or single notes with dissonant soundwaves — what's happening physically is the interaction of sound waves at frequencies that don’t align neatly. These frequencies, often close together but not harmonically related, interfere with one another and produce complex patterns of acoustic "beating" or roughness. Much of this happens at a level below conscious awareness, but our auditory system is remarkably attuned to it. The resulting sound is harder to predict or compress in informational terms — it has higher entropy.13

From an evolutionary perspective, such complex and unstable auditory patterns likely served as alerts to environmental unpredictability: dissonant tones suggest soundwaves from multiple distinct sources — worrying when those sources could be predators! So dissonance might provoke a subtle, built-in unease. In contrast, consonant harmonies — where frequencies align in simple integer ratios — tend to reinforce each other, often generating smooth, predictable overtones that our auditory system interprets as stable and pleasing. This drop in entropy is not just a perceptual simplification; it can feel emotionally satisfying, like a resolution of tension.
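The physical contrast between the two kinds of interval can be sketched in a few lines. The sketch assumes idealised pure tones and just-intonation ratios, and treats the length of the repeating pattern as only a crude proxy for predictability; the helper names are hypothetical.

```python
from fractions import Fraction


def beat_frequency(f1: float, f2: float) -> float:
    """Rate in Hz at which two pure tones drift in and out of phase."""
    return abs(f1 - f2)


def cycles_until_repeat(ratio: Fraction) -> int:
    """Cycles of the lower tone before the combined waveform repeats exactly.

    For tones at f and (p/q) * f, with p/q in lowest terms, the sum repeats
    after q cycles of the lower tone (and p cycles of the upper one).
    """
    return ratio.denominator


perfect_fifth = Fraction(3, 2)    # consonant interval
minor_second = Fraction(16, 15)   # dissonant interval (just-intonation value)

print(cycles_until_repeat(perfect_fifth))  # short repeating pattern
print(cycles_until_repeat(minor_second))   # much longer repeating pattern
print(beat_frequency(440.0, 466.0))        # two nearby tones beat at their difference
```

On this simplified picture, a consonant interval yields a waveform that repeats after a handful of cycles, while a dissonant one takes far longer to come back around, which is one way to read the claim that it is harder to predict.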

In this way, the familiar arc of tension and release in music mirrors a deeper biological impulse: our constant drive to reduce uncertainty, extract order from complexity, and make sense of a noisy world. The pleasure we feel when dissonance resolves into harmony may be more than aesthetic — it may be a small-scale echo of life’s broader thermodynamic struggle against entropy.

But what about specific sensations, like experiencing 'red'? How different this is from simple physical detection: a basic light meter, registering only wavelengths, would report wildly different readings from a stop sign under changing daylight. Yet we perceive that sign as unequivocally 'red' whether in bright noon sun or deep evening shadow. This reliable colour constancy shows that experiencing 'redness' isn't just about passively registering photons (the physical microstate). Instead, it functions as a robust 'software routine' within the organism's regulatory system. This internal process actively discards irrelevant micro-details—the precise mix of wavelengths hitting the retina—to identify a stable, functionally crucial category: 'Stop-Sign Redness'. Crucially, recognizing this category depends not just on the light itself, but its relation to context – the sign's distinct shape, its typical location, its learned meaning ('Stop!'). The characteristic feeling of red, its distinct and unified presence in our awareness, arises precisely because the self-model treats this category as a high-stakes, functionally autonomous signal, essential for organizing perception and guiding action (braking!). Like the feeling of self, the feeling of red is inseparable from its function; it's not some decorative add-on but the subjective way the mechanism itself operates.
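The contrast between the light meter and the perceiver can be illustrated with a toy calculation. It assumes a three-channel sensor, made-up reflectance and illumination values, and a white reference surface somewhere in the scene; the per-channel division is a textbook-style simplification, not a claim about how the visual system actually computes colour.

```python
def sensor_reading(reflectance, illuminant):
    """Raw per-channel signal: what a simple light meter would register."""
    return tuple(r * i for r, i in zip(reflectance, illuminant))


def discount_illuminant(reading, white_reading):
    """Divide by the reading from a white reference surface in the same scene."""
    return tuple(round(x / w, 6) for x, w in zip(reading, white_reading))


stop_sign = (0.80, 0.10, 0.10)   # hypothetical RGB reflectance of the sign
white = (1.0, 1.0, 1.0)          # hypothetical white reference (context)
noon = (100.0, 100.0, 100.0)     # bright, neutral light
dusk = (3.0, 2.0, 4.0)           # dim, bluish light

raw_noon = sensor_reading(stop_sign, noon)
raw_dusk = sensor_reading(stop_sign, dusk)
seen_noon = discount_illuminant(raw_noon, sensor_reading(white, noon))
seen_dusk = discount_illuminant(raw_dusk, sensor_reading(white, dusk))

print(raw_noon, raw_dusk)     # the raw readings differ widely
print(seen_noon, seen_dusk)   # the context-relative category is the same
```

The stable quantity is not in the light itself but in its relation to the rest of the scene, which is the sense in which the category depends on context.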

Can Computers Be Conscious? Are They Yet?

This thermodynamic perspective, developed partly through the analogy with software, naturally leads us back to the question: Can computers themselves, running sophisticated software, be conscious? While the analogy helps clarify why consciousness might feel non-physical, the thermodynamic framework presented here suggests that current computers, despite their complexity, likely lack the essential ingredients for genuine subjective experience. The core difference lies not in computational power, but in the fundamental relationship between the system and environmental entropy.

Biological consciousness, under this theory, arises from the constant, active struggle to maintain a fragile, low-entropy macrostate against the relentless onslaught of external disorder. Current computers and AI systems, built on the von Neumann architecture, fundamentally avoid this struggle by design. This architecture relies on a stored program – the software – held as a static, low-entropy pattern within memory (RAM) until fetched. Computation proceeds by sequentially executing these instructions, involving the transfer of carefully managed, low-entropy information back and forth between memory and the CPU via the bus. This entire process necessitates a highly controlled, stable physical environment, shielding the machine’s circuits from the very thermodynamic pressures that biological systems must constantly counteract. Such machines don't need to actively regulate their core existence against environmental chaos (Figure 8) because their operational integrity depends on external stabilization and the processing of predictable, low-entropy internal data streams. Their persistence is akin to the inert rock's passive resistance (Figure 4), enabled by stable construction, rather than the active, dynamic self-preservation of a living organism managing its improbable state. They lack the essential, embodied confrontation with environmental entropy that drives the need for constant, high-stakes self-regulation.

As mentioned earlier, the key insight here is that modern software does not need a self-model, i.e. a model of itself as existing independent of its external environment. To reiterate, a more fitting analogy for modern software is our unconscious processes: these may qualify as “software” in the sense of being multiply realizable, but like computer circuits, they rarely activate spontaneously from environmental randomness. This is because the activation pathways of unconscious processes are either hardwired by evolution, or the brain has learned highly robust activation pathways over time, eliminating false positives. Usually, it’s a combination of both. For example, walking is initially clumsy and conscious, but is learned fast because the basic logic was coded by evolution. Once learned, the walking modules operate across environments, with no need for a “mind” that models the modules as independent of a noisy environment: noise is robustly filtered in this case, as in computers.

Even our most complex software doesn’t have what it takes for consciousness. The behavior of current AI models when faced with continuous, open-ended learning hints at their thermodynamic fragility. In particular, phenomena like "catastrophic forgetting" in neural networks, where learning new information quickly destroys previously acquired knowledge, illustrate how rapidly these systems lose coherence if they are forced to adapt constantly to the high-entropy stream of information encountered by biological organisms, rather than being trained in discrete, controlled phases. They lack the robust, dynamic mechanisms biological systems evolved to maintain informational integrity over time despite continuous environmental perturbation.
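Catastrophic forgetting can be shown in caricature with a model that has a single weight. This is a deliberately minimal sketch under stated assumptions: the two tasks, the learning rate, and the helper names are all hypothetical, and a real network has far more capacity, though the same mechanism of shared weights being overwritten is what the term refers to.

```python
def train(w, data, lr=0.1, epochs=200):
    """Plain gradient descent on squared error for the one-weight model y = w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def loss(w, data):
    """Mean squared error of the model y = w * x on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


xs = [-1.0, -0.5, 0.5, 1.0]
task_a = [(x, 2.0 * x) for x in xs]    # hypothetical task A: y = 2x
task_b = [(x, -3.0 * x) for x in xs]   # hypothetical task B: y = -3x

w = train(0.0, task_a)
loss_a_before = loss(w, task_a)        # task A is learned almost perfectly
w = train(w, task_b)                   # sequential training, with no replay of task A
loss_a_after = loss(w, task_a)         # performance on task A is now poor

print(loss_a_before, loss_a_after)
```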

In the end, current AI systems are insulated from the existential imperative to self-model; they do not perform this kind of active, embodied, whole-system regulation against environmental entropy, lacking the functional driver of consciousness. Software almost certainly does not feel its own processing yet, but we can make such software. Alex Ororbia’s Free Energy Principle-based paradigm of “Mortal Computation” is an interesting direction here.

V. Conclusion: The Feeling of Function

The ancient riddle of animation and the modern 'hard problem' of consciousness begin to look less mysterious when we view them through the lens of thermodynamics. This perspective suggests our intuition—the deep-seated feeling that mind is distinct from matter—isn't evidence of a separate substance. Instead, it arises directly from the physical challenge faced by all fragile living systems: the constant, active struggle to maintain their improbable order against the universe's relentless drift towards entropy.

This view echoes Spinoza's rejection of Cartesian dualism. He proposed a unified reality where Thought and Extension were not separate substances but parallel attributes of one Substance. It also finds parallels in older concepts, particularly Aristotle's hylomorphic view. For Aristotle, objects are a compound of matter (hyle) and organizing form (morphê), where form embodies the object's purpose or function.

Framing consciousness as this dynamic, organizing form—Aristotle's morphê in action—sidesteps the old Cartesian interaction problem (how can a non-physical mind affect a physical body?). It also dissolves the paradox of epiphenomenalism (the idea that consciousness is a useless byproduct). In this functional view, the feeling is integral to the function. The sting of a hot pan isn't a mere add-on decorating the physical event; it is the effective warning signal, a crucial part of the organism's strategy for persistence. Both Spinoza's parallelism and Aristotle's hylomorphism, though different, offer frameworks where mind/form and body/matter are inseparable aspects of reality. The thermodynamic perspective presented here recovers this kind of integration, grounding it in the physics of self-maintenance.

But this essay proposes more than just parallelism or static form. Consciousness, the 'feeling' aspect, corresponds specifically to the system's active self-modeling and the relentless maintenance of its low-entropy macrostate against dissolution. This functional core explains the very bafflement that consciousness inspires—Chalmers' 'meta-problem'. The feeling of being separate stems from the fundamentally different thermodynamic and informational strategies required to maintain a stable self (modeling the holistic macrostate by necessarily discarding microstate details) versus navigating a chaotic world (tracking specific, granular external events).

Crucially, this self-model isn't just parallel to the physical state, as in Spinoza's system; it is the functionally essential, higher-level control logic. It's the level that is invariant across microstate fluctuations, the level crucial for explaining the system's persistence.

Understanding this functional necessity reframes the 'hard problem'. It starts to look less like a deep metaphysical puzzle and more like a category error born from our own perspective as complex, self-preserving systems. Consciousness is revealed not as an ethereal mist floating above the machinery, but as the intrinsic feeling of that machinery actively organizing itself against the constant threat of dissolution. It’s a modern echo, perhaps, of Aristotle and Spinoza, but one grounded in the thermodynamics of information and life, assigning explanatory priority to the functional level.

The vividness and "what-it's-likeness" of subjective experience, therefore, are not mere decoration. They are inseparable from the high stakes of existence, the constant effort required to stay whole. Pain hurts because hurting is an effective, indispensable signal within a system designed to avoid damage and persist. The feeling cannot be detached from the function.

This functional requirement also suggests why current artificial intelligence likely lacks genuine consciousness. Trained in stable environments, most AI systems don't face the same existential, thermodynamic imperative that shapes biological life. They lack the functional driver—the high-stakes need for macrostate-focused self-regulation against environmental entropy—that necessitates the kind of self-modeling underpinning subjective experience. Building truly conscious machines might require engineering systems that are similarly vulnerable, systems that must actively struggle to maintain their own organized existence against disorder.

Ultimately, understanding consciousness demands that we shed the anthropocentric bias that sets humanity apart from nature. By grounding subjective experience in the universal principles governing fragile, organized matter, we move from seeing mind as a spooky anomaly to recognizing it as a natural, scalable feature of the cosmos. It is the intimate feeling of matter successfully organizing itself, for a time, against the inevitable tide of entropy—the feeling of function, the feeling of life itself.

If we were planet-sized, for example, we’d perceive the effects of relativity directly. One perceivable effect would be relativistic length contraction in our extremities when swinging our limbs, because extremities would be moving at a significant fraction of the speed of light.

My point is we should embrace an evolutionary epistemology when approaching the mind, but not uncritically. The sheer persistence of the hard problem suggests roots in natural selection, especially given that beliefs in some form of immaterial essence (souls, spirits) are nearly culturally universal. But the extreme dualism in Western thought is clearly not inevitable, so cultural evolution is also relevant to understanding our difficulties with consciousness.

Performing live vivisections and interpreting animal cries as mechanical noise (possibly apocryphal).

Note the difference with Platonism. Platonism is an essentialist philosophy, positing inherent unchanging characteristics (e.g. the Platonic Form of a worldly triangle exists for eternity). Unfortunately, inherent characteristics are unobservable, making essentialism antithetical to science. Philosophically, functionalism jettisons eternalism, seeing it as the same logical error made by those who believe souls to be eternal: mistaking relative stability for genuine transcendence. The widespread belief that physical laws are inherently more "real" because they're apparently timeless could therefore simply reflect their comparative simplicity—simpler patterns last longer in a universe of finite resources.

The symmetry break in question is scale-invariance: different laws hold at different scales.

Energy and information conservation are equivalent; see the subsequent argument on Landauer’s principle.

Physical laws, powerful as they are, are not directly observable 'things' but abstract, highly compressed descriptions of invariant relationships that dictate how matter must behave. For example, symmetry of laws with respect to translations and rotations in space corresponds to conservation of linear and angular momentum respectively; symmetry with respect to time corresponds to conservation of energy, symmetry with respect to inertial frame of reference to special relativity, and so on. In the same vein, the functional 'laws' or 'software' modeled by a conscious system represent the invariances crucial for its own persistence, abstracted from the flux of its physical microstates. The subjective feeling of consciousness is the system's way of apprehending these vital functional constraints necessary for its regulation. From this perspective, consciousness as the feeling of function is conceptually parallel to physical law as the description of symmetry. Why should one level of emergent description based on essential invariance be considered inherently more 'magical' than the other?

As outlined earlier, “explained” here carries all the caveats about the ‘Past Hypothesis’. If equilibrium is indeed overwhelmingly likely, à la Boltzmann, why is the universe we observe so structured?

Formally, the simplest state-to-action mapping that minimises the Shannon entropy of the goal state is deterministic. In nontrivial cases, i.e. where different system states affect goal states in different ways, this implies the regulator is a model of the system in question, in the sense that different system states produce unique or near-unique regulator states.
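A toy case may help make this concrete. It appears to be in the spirit of Conant and Ashby's good regulator theorem, though that attribution is an assumption here. The sketch uses made-up dynamics in which a disturbance and an action add modulo 4, and compares a policy that tracks the state with one that ignores it; all names are hypothetical.

```python
import math
from collections import Counter


def shannon_entropy(outcomes):
    """Entropy in bits of the empirical distribution over outcomes."""
    counts = Counter(outcomes)
    total = len(outcomes)
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())


def outcome(state, action, n=4):
    """Hypothetical dynamics: the disturbance and the action add modulo n."""
    return (state + action) % n


def modelling_policy(state):
    """One distinct action per state, so the regulator mirrors the system."""
    return (-state) % 4


def blind_policy(state):
    """Same action whatever the state."""
    return 0


states = [0, 1, 2, 3]  # equally likely disturbances

h_model = shannon_entropy([outcome(s, modelling_policy(s)) for s in states])
h_blind = shannon_entropy([outcome(s, blind_policy(s)) for s in states])
print(h_model, h_blind)
```

Here the policy that maps each state to its own action pins the outcome to a single value, while the state-blind policy leaves the outcome as uncertain as the disturbance itself.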

This idea is central to predictive processing frameworks like Karl Friston's Free Energy Principle (FEP). FEP posits that self-organising systems maintain their non-equilibrium steady state (i.e., stay alive) by minimizing prediction error ('free energy' or 'surprise') about their sensory inputs. This inherently requires the system to possess generative models of both the external world (to predict sensory causes) and itself (to maintain physiological bounds).

Our friend Descartes being a famous example.

Interestingly, psychedelics have high affinity for 5-HT2A receptors, which are more strongly expressed in children, lending support to the previous paragraph suggesting a relationship between brain entropy and time perception.

Specifically, it is hard to compress (it has high Kolmogorov complexity, which is closely related to the Shannon entropy discussed earlier).